These examples were created for a meeting of IO graduate students at the University of Minnesota on 5 October 2016. They are illustrative rather than optimized (not that I can necessarily produce optimal scraping code).

This guide presumes familiarity with the inspector tools in any standard browser, basic HTML, and, of course, Python. The latter is touched upon in my previous post. I think the guide will be most useful if the reader runs the code on their own computer while following along.

Author: Matt Shapiro (shap0157@umn.edu)

Selenium Demonstration

Selenium browser automation is, in my opinion, a last resort for large-scale scraping. The list of cons is extensive (well, I am sure there are more than these two):

- High memory overhead / harder to parallelize

- More prone to program crashes

It becomes a necessity, however, in a few cases I have encountered:

- Documents are generated by a form submission whose underlying API cannot be discerned

- The full page source is only available after JavaScript functions have executed

Test Case

The goal is to collect historical lease records on federal lands in North Dakota from the BLM website. The difficulty in scraping it falls under the first case mentioned above: the lease data is generated on the fly following a form submission on the website. The video below illustrates what the final process should look like.

This tutorial will walk through the basics of Selenium and how to automate the download of these lease records. It will not go through the process of parsing the data out of the downloaded records.

Preparation

As shown in the video, a Selenium-automated browser looks as if an invisible human is navigating through the page. The user's code tells Selenium which web page elements to focus on and how to interact with each one. These interactions include clicking, filling in text, and so on.

Preparing to automate with Selenium then comprises the following steps:

- Identify the steps that need to be automated

- For each step, figure out how to identify the web page elements in Selenium

- Plan for complications in the code

Preparation - Part 1

From the video above, the automation steps are pretty obvious:

1. Start at https://rptapp.blm.gov/landandresourcesreports/rptapp/menu.cfm?appCd=2
2. Click Pub CR Serial Register Page under Public Case Recordation Reports
3. On the new page, select the radio button New Format Serial Entry
4. Click Select Criteria
5. On the new page, select ND North Dakota from the drop-down list next to Geo State
6. Select M from the drop-down list next to Land Office
7. Fill in the desired serial number in the Serial Number entry box; note that some serial numbers also require prefix and suffix entries
8. Click Enter Value
9. Click OK in the alert box
10. Click Run Report
11. Click OK in the alert box
12. Switch to the new window
13. Download the data page
14. Close the page
15. Return to the original window
16. Clear the entries by selecting Clear All
17. Repeat steps 7 through 16 as needed

Multiple serial numbers could be submitted at once in steps 5 through 9, but for the purposes of this demo we submit only one at a time.

Preparation - Part 2

From step 1 on, there are many elements to which we need to direct Selenium. One easy way to point Selenium to elements in a web page is with XPath expressions. A nice tutorial on basic XPath syntax can be found here.

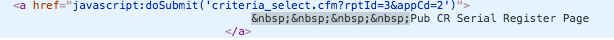

For example, the HTML element surrounding the text of Pub CR Serial Register Page is

With a bit of work, I found that the href attribute contains text unique to the desired HTML element. We can use XPath to point to that element in the page with the expression //a[contains(@href,"Id=3&appCd=2")]:

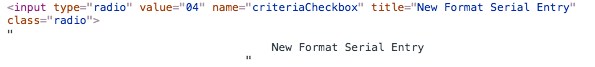

The syntax above performs inexact (substring) attribute matching. Step 3, selecting the radio button for New Format Serial Entry, demonstrates a case for exact matching.

The title attribute should be unique, so we point XPath to this element with //input[@title="New Format Serial Entry"].
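You can experiment with the two kinds of matching above without launching a browser. Python's standard-library ElementTree supports a small XPath subset: exact [@attr='value'] predicates work, but contains() does not. The sketch below therefore handles the substring case with a plain Python filter. The markup is a made-up, simplified stand-in for the BLM page. It is not that page's real source.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup (NOT the real BLM page source).
# ElementTree needs well-formed XML, so "&" is written as "&amp;".
PAGE = """
<html><body>
  <a href="report.cfm?Id=1&amp;appCd=2">Some Other Report</a>
  <a href="report.cfm?Id=3&amp;appCd=2">Pub CR Serial Register Page</a>
  <form>
    <input type="radio" name="fmt" title="Old Format Serial Entry"/>
    <input type="radio" name="fmt" title="New Format Serial Entry"/>
  </form>
</body></html>
"""

root = ET.fromstring(PAGE)

# Exact match, analogous to //input[@title="New Format Serial Entry"]
radio = root.find(".//input[@title='New Format Serial Entry']")

# Substring match, analogous to //a[contains(@href,"Id=3&appCd=2")].
# ElementTree has no contains(), so filter the <a> elements in Python.
links = [a for a in root.iter("a") if "Id=3&appCd=2" in a.get("href", "")]

print(radio.get("title"))  # prints New Format Serial Entry
print(links[0].text)       # prints Pub CR Serial Register Page
```

Real-world HTML is often not well-formed XML, so this only works for quick offline tests. Inside the browser, Selenium evaluates full XPath, including contains().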

Once you can quickly identify unique elements like these, finishing off part 2 of the preparation is a breeze. The code later in this tutorial labels each of these identifying steps with the numbers used in Preparation - Part 1.
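One way to keep the step numbers and the selectors in sync is a small lookup table keyed by the step numbers of Preparation - Part 1. This is a hypothetical organizational sketch: STEP_XPATHS and xpaths_for are made-up names. The XPaths themselves are the ones used in the scraper code in this post.

```python
# Hypothetical lookup table: step number (Preparation - Part 1) -> XPath(s).
# The XPath strings are copied from the scraper code in this post.
STEP_XPATHS = {
    2: ['//a[contains(@href,"Id=3&appCd=2")]'],
    3: ['//input[@title="New Format Serial Entry"]'],
    4: ['//input[@value="Select Criteria"]'],
    5: ['//option[@value="ND"]'],
    6: ['//option[@value="{office}"]'],  # land office filled in per serial
    7: ['//input[@name="prefixInputE"]',
        '//input[@name="snInputE"]',
        '//input[@name="suffixInputE"]'],
    8: ['//input[@value="Enter Value"]'],
    10: ['//input[@value="Run Report"]'],
    16: ['//input[@value="Clear All"]', '//input[@value="Clear"]'],
}


def xpaths_for(step, **params):
    """Return the XPath(s) for a step, filling placeholders such as {office}."""
    return [xp.format(**params) for xp in STEP_XPATHS[step]]


print(xpaths_for(6, office="M"))  # prints ['//option[@value="M"]']
```

In a scraper, each entry would be passed to sess.find_element(By.XPATH, ...). The post's own code keeps the XPaths inline, which is simpler for a one-off script.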

Preparation - Part 3

This bit of preparation is easier once one has more experience with Selenium's idiosyncrasies. To save a bit of time, here are the challenges I ran into when first coding this scraper, along with how we will have Selenium handle them. Some are rather mundane.

- The automated browser's pop-up blocker is on by default and blocks report generation: Selenium allows browser preferences to be tweaked

- The desired HTML was hidden inside an iframe: Selenium can switch focus to an iframe within a web page and access its elements

- Alert pop-ups block the automation from continuing: Selenium natively handles alert boxes

- The generated report appears in a new tab/window: Selenium easily handles window switching

- Report generation varies in time, and the code may crash if it tries to continue too early: Selenium provides explicit waits for finer control over when the code proceeds. Here I noticed the browser has three windows open while the report is generating, and the third goes away once the report has finished. I create a condition that waits until only two windows remain.
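The explicit wait in the last bullet boils down to polling a condition until it becomes true or a timeout passes. Below is a minimal sketch of that idea. wait_until is a simplified, hypothetical stand-in for Selenium's WebDriverWait(driver, timeout).until(condition), and FakeDriver is a made-up object that imitates the three-then-two window behaviour, so the logic can run without a browser.

```python
import time


def wait_until(driver, condition, timeout, poll=0.5):
    """Simplified stand-in for WebDriverWait(driver, timeout, poll).until(condition).

    Calls condition(driver) repeatedly, sleeping `poll` seconds between
    calls, until it returns a truthy value; raises TimeoutError once
    `timeout` seconds have passed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition(driver)
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError("condition not met within {} s".format(timeout))
        time.sleep(poll)


class FakeDriver:
    """Hypothetical browser stand-in: three windows while the report is
    generating, then two once it has finished."""

    def __init__(self, checks_until_done):
        self.remaining = checks_until_done

    @property
    def window_handles(self):
        if self.remaining > 0:
            self.remaining -= 1
            return ["main", "report", "loading"]
        return ["main", "report"]


def two_windows(driver):
    """The condition from the last bullet: exactly two windows open."""
    return len(driver.window_handles) == 2


print(wait_until(FakeDriver(3), two_windows, timeout=5, poll=0.01))  # prints True
```

The real WebDriverWait polls every 0.5 seconds by default and raises Selenium's TimeoutException rather than the built-in TimeoutError.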

Using Selenium

Before diving into the scraping code, a small demonstration illustrates Selenium's basic capabilities.

```python
from selenium import webdriver
```

The primary object you manage is the browser instance you create, here called sess. There are multiple browser options available, including Firefox, Chrome, and even headless browsers if you are working without a display. We will be using Chrome.

Running the following command should open a new Chrome browser window on your computer.

```python
sess = webdriver.Chrome()
```

There is a lot to play around with, but the most basic command opens a website in our browser: the get method loads the specified web page.

```python
sess.get('http://www.google.com')
```

Now, let us do the impossible and find "Donald Trump's dignity" with a Google search. We need to

- Point the webdriver to the text input box

- Input the text

- Hit enter to complete the search

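```python
from selenium.webdriver.common.by import By
from selenium.webdriver.common.keys import Keys

# Point webdriver at the search box; Google's search box has name="q"
# (Selenium 4 syntax; Selenium 3 used sess.find_element_by_xpath)
q = sess.find_element(By.XPATH, '//*[@name="q"]')

# Input text
q.send_keys("Donald Trump's dignity")

# 'Hit Enter'
q.send_keys(Keys.RETURN)
```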

The exercise failed, but it shows off how easy automation can be with Selenium.

```python
sess.quit()
```

Full Example

The code throughout this section will download the mineral lease data for five property areas in North Dakota.

```python
from selenium import webdriver
from selenium.webdriver.common.by import By  # Selenium 4 locator strategies
from selenium.webdriver.support.ui import WebDriverWait
from bs4 import BeautifulSoup as bs
import pandas as pd
import time
import re
```

First, a bit of setup code to assemble the list of serial numbers:

```python
# Extract list of serial numbers to look up
fedleases = pd.read_csv('files/leasesections.csv')
serial = fedleases.serial_number.dropna().drop_duplicates()
serial_list = [s.replace(' ', '') for s in serial]
serial_list = serial_list[:5]  # Limit demo to first five serial numbers
serial_list
```
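For readers without pandas, or to see what dropna() and drop_duplicates() are doing in that cell, here is a rough standard-library equivalent. load_serials is a hypothetical helper, and the sample rows are made up. One caveat: pandas also treats markers such as NA as missing, and this sketch only skips empty cells.

```python
import csv
import io


def load_serials(f, limit=5):
    """Rough stdlib analogue of the pandas cell above.

    Skips blank serial numbers (like dropna), keeps only the first
    occurrence of each (like drop_duplicates), removes spaces, and
    returns at most `limit` serials.
    """
    seen = set()
    serials = []
    for row in csv.DictReader(f):
        value = row.get("serial_number") or ""
        if value == "" or value in seen:
            continue
        seen.add(value)
        serials.append(value.replace(" ", ""))
    return serials[:limit]


# Made-up sample rows, not the real files/leasesections.csv
sample = io.StringIO(
    "serial_number,section\nNDM 12345,1\n,2\nNDM 12345,3\nNDM 0012345,4\n"
)
print(load_serials(sample))  # prints ['NDM12345', 'NDM0012345']
```

With the real file, you would call it as `with open('files/leasesections.csv', newline='') as f: serial_list = load_serials(f)`.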

Second, helper code for the repeated navigation steps and for the window-counting wait mentioned in Preparation - Part 3.

```python
class window_count(object):
    """
    Checks for the number of windows in the browser.
    For use with WebDriverWait's until condition.
    """
    def __init__(self, count):
        self.windowcheck = count

    def __call__(self, driver):
        return self.windowcheck == len(driver.window_handles)


def navigation(caseid):
    """
    This function takes a serial number from serial_list and
    1. Parses the serial number for input in Prep Part 1 steps 6 and 7
    2. Clears the previous serial number entries
    3. Inputs the new serial number data
    4. Generates the report for the serial number
    5. Concludes by switching to the window with the report
    It relies on the global browser session `sess`.
    """
    # Parse serial number into the components input in steps 6 and 7
    number = re.findall(r'\d+', caseid)[0]
    office = re.findall(r'ND(\D+)', caseid)[0]
    if re.match(r'\A00', number) and len(number) > 6:
        # Long numbers with two leading zeros: one zero goes in the prefix box
        prefix = '0'
        number = number[1:]
    else:
        prefix = ''
    trailing = re.search(r'\D+?\Z', caseid)
    suffix = trailing.group(0) if trailing else ''

    time.sleep(5)

    # Step 16
    q = sess.find_element(By.XPATH, '//input[@value="Clear All"]')
    q.click()
    q = sess.find_element(By.XPATH, '//input[@value="Clear"]')
    q.click()

    # Step 5
    q = sess.find_element(By.XPATH, '//option[@value="ND"]')
    q.click()

    # Step 6
    time.sleep(1)
    landoffice = '//option[@value="{}"]'.format(office)
    q = sess.find_element(By.XPATH, landoffice)
    q.click()

    # Step 7: prefix, serial number, and suffix boxes
    q = sess.find_element(By.XPATH, '//input[@name="prefixInputE"]')
    q.send_keys(prefix)
    q = sess.find_element(By.XPATH, '//input[@name="snInputE"]')
    q.send_keys(number)
    q = sess.find_element(By.XPATH, '//input[@name="suffixInputE"]')
    q.send_keys(suffix)

    # Step 8
    q = sess.find_element(By.XPATH, '//input[@value="Enter Value"]')
    q.click()

    # Step 9
    time.sleep(1)
    sess.switch_to.alert.accept()

    # Step 10
    q = sess.find_element(By.XPATH, '//input[@value="Run Report"]')
    q.click()

    # Step 11
    time.sleep(1)
    sess.switch_to.alert.accept()

    # Block the code from continuing until only 2 windows remain
    # after report generation
    time.sleep(4)
    try:
        WebDriverWait(sess, 100).until(window_count(2))
    finally:
        # Step 12
        sess.switch_to.window(sess.window_handles[1])
```
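The serial-number parsing at the top of navigation never touches the browser. That means it can be pulled out into a pure function and checked on its own before a long scraping run. parse_serial is a hypothetical name. The regular expressions are the ones navigation uses, and the serial numbers in the usage lines are made-up examples.

```python
import re


def parse_serial(caseid):
    """Split a space-free serial number such as 'NDM12345' into
    (land office, prefix, number, suffix), mirroring navigation()."""
    number = re.findall(r"\d+", caseid)[0]
    office = re.findall(r"ND(\D+)", caseid)[0]
    # Long numbers with two leading zeros: one zero moves to the prefix box
    if number.startswith("00") and len(number) > 6:
        prefix, number = "0", number[1:]
    else:
        prefix = ""
    # Any trailing non-digit characters form the suffix
    trailing = re.search(r"\D+?\Z", caseid)
    suffix = trailing.group(0) if trailing else ""
    return office, prefix, number, suffix


print(parse_serial("NDM12345"))    # prints ('M', '', '12345', '')
print(parse_serial("NDM0012345"))  # prints ('M', '0', '012345', '')
print(parse_serial("NDM12345A"))   # prints ('M', '', '12345', 'A')
```

Keeping the parsing separate like this also makes it easy to add checks for serial formats the regexes do not anticipate. Such formats raise an IndexError here, for example when the serial number contains no digits.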

Finally, the main code block starts up the webdriver and works through steps 1-4 from Preparation - Part 1. The code then loops through the desired serial numbers and downloads the data.

```python
blmhome = 'https://rptapp.blm.gov/landandresourcesreports/rptapp/menu.cfm?appCd=2'

# Set up Chrome options to disable pop-up blocking
cp = webdriver.ChromeOptions()
cp.add_argument('--disable-popup-blocking')
sess = webdriver.Chrome(options=cp)

sess.get(blmhome)  # Step 1

# Because we need to switch windows later to get the report, we need to save
# selenium's ID for our main page
windowid = sess.current_window_handle

# Step 2
q = sess.find_element(By.XPATH, '//a[contains(@href,"Id=3&appCd=2")]')
q.click()
time.sleep(1)

# Step 3
q = sess.find_element(By.XPATH, '//input[@title="New Format Serial Entry"]')
q.click()

# Step 4
q = sess.find_element(By.XPATH, '//input[@value="Select Criteria"]')
q.click()
time.sleep(2)
```

Steps 5 onward are embedded in the loop over the serial numbers, which downloads the data. Technically, reports are several pages long and require further manipulation to download each page; here I just download the first page for demonstration.

```python
for case in serial_list:
    # This function clears any existing data on the page (none at first)
    # and moves us to step 12
    navigation(case)

    # The content of the new page is embedded in an iframe named "iServerFrame"
    sess.switch_to.frame('iServerFrame')

    # Step 13: save the page source for later parsing
    with open('SN_{}.html'.format(case), 'w', encoding='utf-8') as f:
        f.write(sess.page_source)

    # Step 14: close the report window
    sess.close()

    # Step 15: switch back to the main window
    sess.switch_to.window(windowid)

sess.quit()
```

Final Notes

I have found that Selenium is more prone to crashing than more direct scraping methods. This makes it especially important to have a system that monitors whether everything is working correctly while the scraper runs unattended. Some extra steps I took in the real code, but left out here, include:

- Download log

- Emails to my account if the program crashed

- Checks embedded within the program to handle common errors without crashing, e.g. no data for a particular serial number, or the service having stopped working
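The first and third ideas can be sketched with the standard logging module. The sketch assumes the per-serial work is wrapped in a single function. download_all and download_one are hypothetical names, the logger name is made up, and email alerts are left out.

```python
import logging

# Hypothetical logger name; calling logging.basicConfig(filename=..., level=logging.INFO)
# once at startup would send these messages to a log file
log = logging.getLogger("blm_scraper")


def download_all(serials, download_one):
    """Run download_one(serial) for every serial, logging each outcome.

    An exception for one serial is logged and the loop moves on, instead
    of the whole run crashing. Returns (succeeded, failed) lists.
    """
    succeeded, failed = [], []
    for serial in serials:
        try:
            download_one(serial)
        except Exception:
            log.exception("download failed for %s", serial)
            failed.append(serial)
        else:
            log.info("downloaded %s", serial)
            succeeded.append(serial)
    return succeeded, failed


# Usage with a fake downloader that fails for one (made-up) serial number
def fake_download(serial):
    if serial == "NDM2":
        raise RuntimeError("no data for this serial number")


ok, bad = download_all(["NDM1", "NDM2", "NDM3"], fake_download)
print(ok, bad)  # prints ['NDM1', 'NDM3'] ['NDM2']
```

In the scraper, download_one would wrap navigation(), the iframe switch, the file write, and closing the report window. After a failure, the browser may be left on the report window or with an alert open. A real version would also need to reset that state, for example by switching back to windowid, before moving on.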
